Three technologies are competing to make artificial intelligence faster, simpler, cheaper, and smarter. High-performance computing is available today, while quantum computing and neuromorphic computing are on the verge of commercialization. The latter two could revolutionize AI and deep learning.

There are three problems with artificial intelligence and deep learning.

Time: Training deep networks such as CNNs and RNNs takes weeks, and that does not include the time needed to define the problem and program the network until it reaches usable performance.

Cost: Running hundreds of GPUs continuously is very expensive. Renting 800 GPUs from Amazon's cloud computing service costs about $120,000 a week. This does not include labor: an AI project can consume several months or more of highly skilled staff time.
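To put the rental figure in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the two numbers quoted above (800 GPUs, about $120,000 a week); the function names and the four-week training run are illustrative assumptions, not figures from Amazon.

```python
# Figures quoted in the text: 800 rented GPUs at roughly $120,000 per week.
GPUS = 800
WEEKLY_COST_USD = 120_000
HOURS_PER_WEEK = 7 * 24  # 168 hours of continuous use


def cost_per_gpu_hour(weekly_cost, gpus, hours=HOURS_PER_WEEK):
    """Implied price of one GPU for one hour."""
    return weekly_cost / (gpus * hours)


def training_cost(weeks, weekly_cost=WEEKLY_COST_USD):
    """Compute-only cost of a run lasting `weeks` weeks (labor excluded)."""
    return weeks * weekly_cost


rate = cost_per_gpu_hour(WEEKLY_COST_USD, GPUS)
print(f"Implied rate: ${rate:.2f} per GPU-hour")
# Hypothetical four-week training run, chosen only for illustration.
print(f"Four-week run: ${training_cost(4):,}")
```

The implied rate is under a dollar per GPU-hour, so the expense comes from scale and duration rather than from the unit price.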

Data: In many cases a project cannot succeed because there is not enough labeled data. There are many good ideas, but the cost of training data is too high.

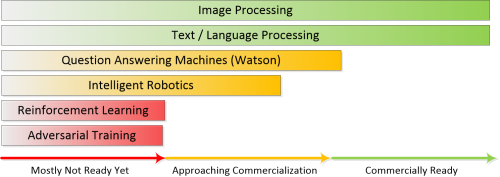

The areas that have made great strides commercially mainly involve image processing and text or speech recognition, and many of the startups in them rely on the excellent image and speech model APIs from companies such as Google, IBM, and Microsoft.

Three tracks of artificial intelligence

If you follow this field, you will see that we have moved ahead using CNNs and RNNs. Development beyond these applications is only now emerging, and the next wave of progress will come from generative adversarial networks (GANs) and reinforcement learning, along with question answering machines (QAMs) such as Watson.

This is the most common view of how artificial intelligence will advance: ever more complex deep neural networks, with architectures that differ from today's common CNNs and RNNs.

In fact, the future may be completely different. What we see is a three-way race for the future of artificial intelligence, based on completely different technologies. They are:

1. High-performance computing (HPC)

2. Neuromorphic computing (NC)

3. Quantum computing (QC)

Of these, high-performance computing is the main focus of attention today. Competition among chip makers and vendors such as Google is accelerating the development of deep learning chips. Neuromorphic computing (spiking neural networks) and quantum computing always seem to be years away. In fact, neuromorphic chips and quantum computers are already in commercial use for machine learning.

Either way, these two new technologies will disrupt the current path of artificial intelligence, which is a good thing.

High-performance computing

High-performance computing currently gets the most attention. It makes existing deep neural network architectures faster and easier to access.

Basically this means two things: better general-purpose environments such as TensorFlow, and greater use of GPUs and FPGAs in ever larger data centers, with more specialized chips emerging.

AI's new business model is "open source." In the first half of 2016, basically all the major AI players open-sourced their AI platforms. They are investing heavily in data centers, cloud services, and AI intellectual property. The strategy behind open source is simple: the platform with the most users wins.

While Intel, NVIDIA, and other traditional chip makers rush to meet the new demand for GPUs, giants such as Google and Microsoft are developing proprietary chips to make their own deep learning platforms faster and more attractive.

TensorFlow is Google's powerful general-purpose solution, and combining it with Google's recently released proprietary chip, the TPU, achieves better results.

Microsoft has been promoting the use of non-proprietary FPGAs and has released version 2.0 of the Cognitive Toolkit (CNTK). CNTK provides a Java API that integrates directly with Spark. It supports Keras code, so users can migrate easily from Google. CNTK is reported to be faster and more accurate than TensorFlow, and it also provides a Python API.

Spark integration will remain an important driver. Yahoo has brought TensorFlow to Spark. Databricks, Spark's main commercial provider, has released an open source package that combines deep learning with Spark.

The key drivers here address at least two of the three barriers. These improvements make programming faster and easier and produce more reliable results, and faster chips in particular shorten computation time.

Neuromorphic computing (NC) or spiking neural networks (SNN)

Neuromorphic computing, or spiking neural networks, is a path toward strong artificial intelligence based on principles of how the brain operates, which is quite different from current deep neural networks.

Researchers observed that not all neurons in the brain fire every time. A neuron sends signals selectively along its links, and the data is actually encoded in the spikes of the signal. Because these signals consist of trains of spikes, researchers study encodings based on the amplitude, frequency, and latency of the signal.

In existing deep neural networks, by contrast, every neuron is activated every time, according to a relatively simple activation function.
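The contrast between a neuron that is activated on every input and one that fires selectively can be sketched in a few lines of Python. This is a minimal illustration, assuming a textbook leaky integrate-and-fire model as a stand-in for a spiking neuron; the threshold, leak factor, and input values are arbitrary choices, and nothing here reflects how any particular neuromorphic chip works.

```python
def relu(x):
    """Conventional activation: produces an output for every input."""
    return max(0.0, x)


def lif_spikes(inputs, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron (illustrative parameters).

    The membrane potential decays by `leak` each step, accumulates the
    incoming current, and emits a spike (1) only when it reaches
    `threshold`, after which it resets to zero.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


inputs = [0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.6, 0.6]

dense_outputs = [relu(x) for x in inputs]
spike_train = lif_spikes(inputs)

print(dense_outputs)  # one activation value per input
print(spike_train)    # [0, 0, 0, 1, 0, 0, 0, 1]
```

The conventional neuron emits a value at every step, while the spiking neuron stays silent most of the time and carries its information in when the spikes occur.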

Neuromorphic computing offers several significant improvements over current deep learning neural networks.

1. Since not all "neurons" are activated every time, a single SNN neuron can replace hundreds of neurons in a traditional deep neural network, resulting in higher efficiency in terms of power and volume.

2. Early examples show that neuromorphic systems can learn from their environment using unsupervised techniques (without labeled examples) and can learn quickly from very few samples.

3. Neuromorphic systems can learn in one environment and apply what they learned in another. They can remember and generalize, which is a true breakthrough capability.

4. Neuromorphic computing is more energy efficient, which opens a path to miniaturization.

This fundamental architectural change could solve all three basic problems facing deep learning today.

Most importantly, you can buy and use a neuromorphic spiking neural network system today. This is not a distant technology.

BrainChip Holdings has deployed a commercial security surveillance system at one of Las Vegas' largest casinos and has announced other applications to be delivered. In Las Vegas, the system automatically detects dealer errors by monitoring video from the cameras, and it learned the rules of the games entirely by observation. BrainChip is a publicly traded company on the Australian Stock Exchange that has launched a line of casino monitoring products.

Quantum computing

Some facts about quantum computing that you may not know:

Quantum computing is already available. Lockheed Martin has used it in commercial operations since 2010. Several other companies are launching commercial applications based on the D-Wave quantum computer, the first quantum computer brought to the commercial market. D-Wave hopes to double the size of its quantum computers every year.

In May of this year, IBM announced the launch of IBM Q, its commercial quantum computing offering. It is a cloud-based subscription service that greatly simplifies access to these expensive and complex machines. IBM said that users have run 300,000 experiments so far.

Google and Microsoft are expected to release their commercial quantum computers in the next two to three years, as are many independent and academic institutions.

D-Wave and a number of independent researchers have released open source programming languages that make programming quantum devices easier.

Quantum computers excel at various types of optimization problems.
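To give a sense of what "optimization problem" means here, the sketch below solves a tiny quadratic unconstrained binary optimization (QUBO) problem, the form in which problems for annealing-style machines are commonly expressed. This is an assumption added for illustration: the article does not name QUBO, the matrix values are made up, and the solver is an ordinary classical brute-force search, not a quantum algorithm.

```python
from itertools import product


def qubo_energy(x, Q):
    """Energy of binary assignment x under matrix Q: sum of Q[i][j]*x[i]*x[j]."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force_qubo(Q):
    """Try every binary assignment and return the lowest-energy one.

    The search space doubles with each added variable, which is why
    large instances are hard for conventional machines.
    """
    n = len(Q)
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))


# Toy instance (hypothetical values): diagonal entries reward choosing an
# item, off-diagonal entries penalize choosing two items together.
Q = [
    [-2, 6, 6],
    [0, -4, 6],
    [0, 0, -3],
]

best = brute_force_qubo(Q)
print(best, qubo_energy(best, Q))  # (0, 1, 0) -4
```

With three variables there are only eight assignments to check; with a few hundred there are more than a conventional exhaustive search could ever enumerate, which is the regime where specialized hardware is claimed to help.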

According to a 2015 Google research report, the D-Wave quantum computer outperformed traditional computers by a factor of 10^8, which is to say it was 100 million times faster. Google Engineering Director Hartmut Neven said: "What D-Wave can do in 1 second would take a traditional computer 10,000 years."

Quantum computing represents a third path to strong artificial intelligence, one that overcomes the speed and cost problems.

How will the future develop?

Both neuromorphic and quantum computing have potential. Looking at the timeline, high-performance computing will continue to evolve over the next few years, while more and more laboratories and data centers adopt the more advanced quantum computers and neuromorphic systems.

Deep learning platforms such as Google's TensorFlow and Microsoft's CNTK are evolving, and other platforms are also competing for users. As quantum and neuromorphic computing develop, these platforms will gradually adapt to them.

Quantum computers will eliminate the time barrier, the cost barrier will shrink as well, and new types of machine learning will emerge.

My personal view is that quantum and neuromorphic computing today are much like the situation in 2007, when Google's distributed data storage system Bigtable became open source Hadoop. We didn't know what to do with it at first, but three years later Hadoop dominated all of data science.
